The multivariate direct filter approach (MDFA) is a generic real-time signal extraction and forecasting framework endowed with a richly parameterized interface allowing for adaptive and fully regularized data analysis in large multivariate time series. The methodology is based primarily in the frequency domain, where all the optimization criteria are defined, from regularization, to forecasting, to filter constraints. For an in-depth tutorial on the mathematical formulation, the reader is invited to check out any of the many publications or tutorials on the subject from blog.zhaw.ch.

This MDFA-Toolkit (clone here) provides a fast, modularized, and adaptive framework in Java for doing such real-time signal extraction across a variety of applications. Furthermore, we have developed several components for the package featuring streaming time series data analysis tools not known to be available anywhere else. These new features include:

- A fractional differencing optimization tool for transforming nonstationary time series into stationary time series while preserving memory (inspired by Marcos Lopez de Prado’s recent book, Advances in Financial Machine Learning, Wiley 2018).

- Easy-to-use interface to four different signal generation outputs:

  - Univariate series -> univariate signal

  - Univariate series -> multivariate signal

  - Multivariate series -> univariate signal

  - Multivariate series -> multivariate signal

- Generalization of the optimization criterion for the signal extraction. One can use a periodogram, a model-based spectral density of the data, or anything in between.

- Real-time adaptive parameterization control – make slight adjustments to the filter process parameterization effortlessly

- Build a filtering process from simpler user-defined filters, applying customization and reducing degrees of freedom.
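The periodogram mentioned above is the simplest spectral estimate that can enter the frequency-domain criterion. As a rough illustration (not the toolkit's own routine; the class name `Periodogram` is hypothetical, and the normalization by 2πN is one common convention among several), it can be computed from the discrete Fourier transform at the Fourier frequencies ω_k = 2πk/N, k = 0, …, N/2:

```java
/**
 * Hypothetical sketch of a periodogram:
 * I(w_k) = |sum_t x_t e^{-i w_k t}|^2 / (2 pi N), with w_k = 2 pi k / N, k = 0..N/2.
 * The normalization is an assumed convention, not the MDFA-Toolkit's.
 */
final class Periodogram {
    private Periodogram() {}

    static double[] compute(double[] x) {
        int n = x.length;
        int m = n / 2;
        double[] out = new double[m + 1];
        for (int k = 0; k <= m; k++) {
            double omega = 2.0 * Math.PI * k / n;
            double re = 0.0;
            double im = 0.0;
            // Direct DFT sum at the k-th Fourier frequency
            for (int t = 0; t < n; t++) {
                re += x[t] * Math.cos(omega * t);
                im -= x[t] * Math.sin(omega * t);
            }
            out[k] = (re * re + im * im) / (2.0 * Math.PI * n);
        }
        return out;
    }
}
```

The direct O(N²) sum keeps the sketch dependency-free; for long series an FFT would be the natural replacement.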

This package also provides an API to three other real-time data analysis frameworks that are available now or will be soon:

- iMetricaFX – An app written entirely in JavaFX for doing real-time time series data analysis with MDFA

- MDFA-DeepLearning – A new recurrent neural network methodology for learning in large noisy time series

- MDFA-Tradengineer – An automated algorithmic trading platform combining MDFA-Toolkit, MDFA-DeepLearning, and Esper – a library for complex event processing (CEP) and streaming analytics

To start the most basic signal extraction process using MDFA-Toolkit, three things need to be defined:

- The data streaming process which determines from where and what kind of data will be streamed

- A transformation of the data, which includes any logarithmic transform, normalization, and/or (fractional) differencing

- A signal extraction definition which is defined by the MDFA parameterization

Data streaming

In the current version, time series data is provided by a streaming CSVReader, where the time series index is given by a String DateTime stamp in the first column and the value(s) are given in the following columns. For multivariate data, two options are available for streaming data: 1) a multiple-column .csv file, with each time series in a separate column, or 2) multiple referenced single-column time-stamped .csv files. In the second case, the DateTime stamps of the series are checked for agreement, and an exception is thrown if they do not agree. More sophisticated multivariate time series data streamers that account for missing values will soon be available.
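The timestamp check for multiple single-column files can be sketched as follows. This is a hypothetical illustration of the rule described above, not the toolkit's CsvFeed or CSVReader API: each source is assumed to be an in-memory list of `timestamp,value` lines, and the class name `AlignedCsvMerge` is made up for this example (the nested `record` needs Java 16 or later).

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical sketch: merge several single-column, time-stamped sources row by row,
 * throwing an exception as soon as the timestamps of a row disagree.
 */
final class AlignedCsvMerge {
    private AlignedCsvMerge() {}

    /** One merged multivariate observation. */
    record Row(String timestamp, double[] values) {}

    static List<Row> merge(List<List<String>> sources) {
        if (sources.isEmpty()) {
            throw new IllegalArgumentException("No sources given");
        }
        int n = sources.get(0).size();
        for (List<String> s : sources) {
            if (s.size() != n) {
                throw new IllegalStateException("Sources have different lengths");
            }
        }
        List<Row> rows = new ArrayList<>(n);
        for (int i = 0; i < n; i++) {
            String stamp = null;
            double[] values = new double[sources.size()];
            for (int j = 0; j < sources.size(); j++) {
                // Each line is assumed to be "timestamp,value"
                String[] parts = sources.get(j).get(i).split(",");
                String ts = parts[0].trim();
                if (stamp == null) {
                    stamp = ts;
                } else if (!stamp.equals(ts)) {
                    throw new IllegalStateException(
                            "Timestamp mismatch at row " + i + ": " + stamp + " vs " + ts);
                }
                values[j] = Double.parseDouble(parts[1].trim());
            }
            rows.add(new Row(stamp, values));
        }
        return rows;
    }
}
```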

Transforming the data

Depending on the type of time series data and the application or objectives of the real-time signal extraction process, transforming the data in real time might be an attractive feature. The transformation of the data can include (but is not limited to) the following:

- A Box-Cox transform, one of the more common transformations in financial and other non-stationary time series.

- (Fractional) differencing, defined by a value d in [0,1]. When d = 1, standard first-order differencing is applied.

- For stationary series, standard mean-variance normalization or a more exotic GARCH normalization which attempts to model the underlying volatility is also available.
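Fractional differencing expands the operator (1 − B)^d, where B is the backshift operator, into weights w_0 = 1 and w_k = −w_{k−1}(d − k + 1)/k. The sketch below applies these weights over a fixed window, truncating once |w_k| falls below a tolerance, in the spirit of the fixed-width window approach in Lopez de Prado's book. It is only an illustration under assumptions: the class name `FracDiff` and the `tol`/`maxLags` parameters are hypothetical, and the toolkit's procedure for optimizing d is not shown.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch of fixed-window fractional differencing. */
final class FracDiff {
    private FracDiff() {}

    /** Weights of (1 - B)^d, stopping once |w_k| < tol or after maxLags lags. */
    static double[] weights(double d, double tol, int maxLags) {
        List<Double> w = new ArrayList<>();
        w.add(1.0);
        for (int k = 1; k <= maxLags; k++) {
            double next = -w.get(k - 1) * (d - k + 1) / k;
            if (Math.abs(next) < tol) {
                break;
            }
            w.add(next);
        }
        double[] out = new double[w.size()];
        for (int i = 0; i < out.length; i++) {
            out[i] = w.get(i);
        }
        return out;
    }

    /** y_t = sum_j w_j x_{t-j}; the first w.length - 1 points have no full window and are dropped. */
    static double[] apply(double[] x, double[] w) {
        int k = w.length;
        if (x.length < k) {
            return new double[0];
        }
        double[] y = new double[x.length - k + 1];
        for (int t = k - 1; t < x.length; t++) {
            double s = 0.0;
            for (int j = 0; j < k; j++) {
                s += w[j] * x[t - j];
            }
            y[t - k + 1] = s;
        }
        return y;
    }
}
```

With d = 1 the recursion gives w = (1, −1) and then stops, reproducing standard first-order differencing; with 0 < d < 1 the weights decay slowly, which is what preserves memory.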

Signal extraction definition

Once the data streaming and transformation procedures have been defined, the signal extraction parameters can then be set in a univariate or multivariate setting. (Multiple signals can be constructed as well, so that the output is a multivariate signal.) A signal extraction process is defined by an MDFABase object (or an array of MDFABase objects in the multivariate signal case). The parameters are as follows:

- Filter length: the length L in number of lags of the resulting filter

- Low-pass/band-pass frequency cutoffs: the frequency range of the signal to be extracted from the time series data

- In-sample data length: how much historical data is used to construct the MDFA filter

- Customization: α (smoothness) and λ (timeliness) control the smoothness of the filter, by damping high-frequency noise, and its timeliness, by emphasizing the phase-delay error in the frequency domain

- Regularization parameters: control the decay rate and strength and the smoothness of the (multivariate) filter coefficients, as well as cross-series similarity in the multivariate case

- Lag: controls forecasting (negative values) or smoothing (positive values)

- Filter constraints i1 and i2: constrain the filter coefficients to sum to one (i1) and/or their dot product with (0, 1, …, L) to equal the phase shift (i2), where L is the filter length.

- Phase shift: the derivative of the frequency response function at the zero frequency.
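The constraints and the phase shift are all statements about the frequency response Γ(ω) = Σ_j b_j e^{−ijω} of the real-time filter. Near ω = 0, Γ(ω) ≈ Σ_j b_j − iω Σ_j j b_j, so i1 fixes Γ(0) = 1 and, given i1, the dot product Σ_j j b_j equals the shift −Φ(ω)/ω as ω → 0, where Φ is the phase. The sketch below checks this numerically; it assumes coefficients indexed by lags j = 0, …, L−1, and the class and method names are hypothetical.

```java
/**
 * Hypothetical sketch: frequency response of a real-time filter with
 * coefficients b_0..b_{L-1} and the quantities behind the i1/i2 constraints.
 */
final class FilterResponse {
    private FilterResponse() {}

    /** Returns {Re, Im} of Gamma(w) = sum_j b_j e^{-ijw}. */
    static double[] response(double[] b, double omega) {
        double re = 0.0;
        double im = 0.0;
        for (int j = 0; j < b.length; j++) {
            re += b[j] * Math.cos(j * omega);
            im -= b[j] * Math.sin(j * omega);
        }
        return new double[]{re, im};
    }

    /** i1 quantity: Gamma(0) = sum_j b_j (constrained to 1). */
    static double level(double[] b) {
        double s = 0.0;
        for (double v : b) {
            s += v;
        }
        return s;
    }

    /** i2 quantity: dot product of b with (0, 1, ..., L-1). */
    static double lagMoment(double[] b) {
        double s = 0.0;
        for (int j = 0; j < b.length; j++) {
            s += j * b[j];
        }
        return s;
    }

    /** Shift at frequency zero, approximated by -Phi(h)/h for a small h > 0. */
    static double shiftNearZero(double[] b, double h) {
        double[] g = response(b, h);
        return -Math.atan2(g[1], g[0]) / h;
    }
}
```

For coefficients that sum to one, `lagMoment` and `shiftNearZero` agree up to an O(h²) error, which is why a single dot product is enough to pin down the shift in the i2 constraint.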

All these parameters are controlled in an MDFABase object, which holds all the information associated with the filtering process. It includes its own interface, which ensures the MDFA filter coefficients are updated automatically any time the user changes a parameter in real time.
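One common way to get this "update on every parameter change" behavior is a fluent setter that notifies listeners. The following is a hypothetical sketch of that pattern only; `FilterParams` and `onChange` are made-up names and do not reflect how MDFABase is actually implemented.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

/**
 * Hypothetical sketch (not MDFABase): a fluent parameter holder that
 * notifies every registered listener after each setter call.
 */
final class FilterParams {
    private double lowpassCutoff = Math.PI / 6.0;
    private double lag = 0.0;
    private final List<Consumer<FilterParams>> listeners = new ArrayList<>();

    FilterParams onChange(Consumer<FilterParams> listener) {
        listeners.add(listener);
        return this;
    }

    FilterParams setLowpassCutoff(double cutoff) {
        this.lowpassCutoff = cutoff;
        fireChanged();
        return this;
    }

    FilterParams setLag(double lag) {
        this.lag = lag;
        fireChanged();
        return this;
    }

    double getLowpassCutoff() {
        return lowpassCutoff;
    }

    double getLag() {
        return lag;
    }

    // Called after every change, e.g. to trigger a coefficient recomputation
    private void fireChanged() {
        for (Consumer<FilterParams> l : listeners) {
            l.accept(this);
        }
    }
}
```

In such a design the listener would typically recompute the coefficients of the filter that owns the parameters, so chained setters keep the fluent style while still triggering updates.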

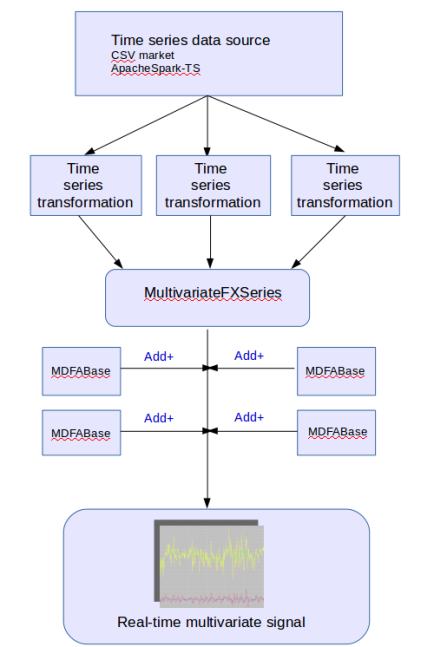

Figure 1: Overview of the main module components of MDFA-Toolkit and how they are connected

Figure 1 shows the main components that need to be defined to set up a signal extraction process in MDFA-Toolkit. The signal extraction process begins with a handle on the data streaming process, which in this article we demonstrate using a simple CSV market file reader included in the package. The CSV file should contain the raw time series data and, ideally, a time (or date) stamp column. If there is no time stamp column, a stamp is simply generated for each value.

Once the data stream has been defined, it is passed into a time series transformation process, which automatically handles all the data transformations as new data is streamed. As we’ll see, the TargetSeries object defines such transformations, and all streaming data is passed directly to the TargetSeries object. A MultivariateFXSeries is then instantiated with references to each TargetSeries object. The MDFABase objects contain the MDFA parameters and are added to the MultivariateFXSeries to produce the final signal extraction output.
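To make the stream -> transform -> filter chain concrete, here is a minimal self-contained sketch for a single series: each incoming raw value is log-differenced (the d = 1 case) and the real-time output y_t = Σ_j b_j x_{t−j} is computed from the L most recent transformed values. The class `StreamingFilter` is hypothetical, the coefficients are assumed to be already computed, and the raw values are assumed positive (required by the log).

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.Iterator;

/**
 * Hypothetical sketch: log-difference each raw value on arrival and apply
 * a fixed real-time filter b_0..b_{L-1} to the most recent transformed values.
 */
final class StreamingFilter {
    private final double[] b;                                // filter coefficients
    private final Deque<Double> window = new ArrayDeque<>(); // transformed values, newest first
    private double lastRaw = Double.NaN;

    StreamingFilter(double[] coefficients) {
        this.b = coefficients.clone();
    }

    /** Adds a raw observation; returns the filter output, or NaN until L transformed values exist. */
    double add(double raw) {
        if (Double.isNaN(lastRaw)) {
            lastRaw = raw;
            return Double.NaN; // no difference is available for the first value
        }
        double x = Math.log(raw) - Math.log(lastRaw);
        lastRaw = raw;
        window.addFirst(x);
        if (window.size() > b.length) {
            window.removeLast();
        }
        if (window.size() < b.length) {
            return Double.NaN;
        }
        double y = 0.0;
        Iterator<Double> it = window.iterator(); // iterates newest to oldest
        for (int j = 0; j < b.length; j++) {
            y += b[j] * it.next();
        }
        return y;
    }
}
```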

To demonstrate these components and how they come together, we illustrate the package with a simple example where we wish to extract three independent signals from AAPL daily open prices from the past 5 years. We also do this in a multivariate setting, to see how all the components interact, yielding a multivariate series -> multivariate signal.

```java
// Define three data source files; the first one will be the target series
String[] dataFiles = new String[]{"AAPL.daily.csv", "QQQ.daily.csv", "GOOG.daily.csv"};

// Create a CSV market feed, where Index is the Date column and Open is the data
CsvFeed marketFeed = new CsvFeed(dataFiles, "Index", "Open");

/* Create three independent signal extraction definitions using MDFABase:
   one lowpass filter with cutoff PI/20 and two bandpass filters */
MDFABase[] anyMDFAs = new MDFABase[3];

anyMDFAs[0] = (new MDFABase()).setLowpassCutoff(Math.PI/20.0)
        .setI1(1)
        .setHybridForecast(.01)
        .setSmooth(.3)
        .setDecayStart(.1)
        .setDecayStrength(.2)
        .setLag(-2.0)
        .setLambda(2.0)
        .setAlpha(2.0)
        .setSeriesLength(400);

anyMDFAs[1] = (new MDFABase()).setLowpassCutoff(Math.PI/10.0)
        .setBandPassCutoff(Math.PI/15.0)
        .setSmooth(.1)
        .setSeriesLength(400);

anyMDFAs[2] = (new MDFABase()).setLowpassCutoff(Math.PI/5.0)
        .setBandPassCutoff(Math.PI/10.0)
        .setSmooth(.1)
        .setSeriesLength(400);

/* Instantiate a multivariate series with the MDFABase definitions
   and the date format of the CSV market feed */
MultivariateFXSeries fxSeries = new MultivariateFXSeries(anyMDFAs, "yyyy-MM-dd");

/* Now add the three series, each one a TargetSeries representing the series
   we will receive from the CSV market feed. The TargetSeries defines the
   data transformation. Here we use differencing order 1.0 with a
   log-transform applied */
fxSeries.addSeries(new TargetSeries(1.0, true, "AAPL"));
fxSeries.addSeries(new TargetSeries(1.0, true, "QQQ"));
fxSeries.addSeries(new TargetSeries(1.0, true, "GOOG"));

/* Now start filling fxSeries with data, starting with
   the first 600 observations from the market feed */
for (int i = 0; i < 600; i++) {
    TimeSeriesEntry observation = marketFeed.getNextMultivariateObservation();
    fxSeries.addValue(observation.getDateTime(), observation.getValue());
}

// Now compute the filter coefficients with the current data
fxSeries.computeAllFilterCoefficients();

// You can also chop off some of the data; here we chop off 70 observations
fxSeries.chopFirstObservations(70);

// Plot the data so far
fxSeries.plotSignals("Original");
```

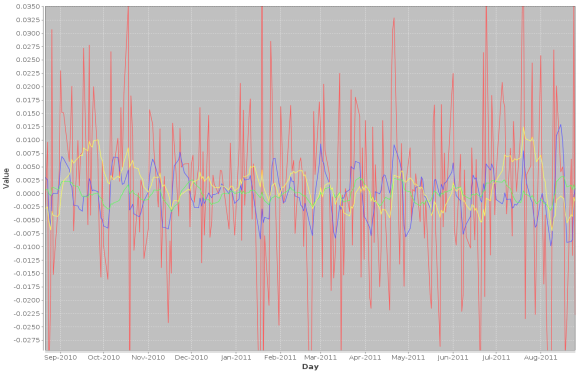

Figure 2: Output of the three signals on the target series (red) AAPL

In the first line, we reference three data sources (AAPL daily open, QQQ daily open, and GOOG daily open). All signals are constructed for the target series, which by default is the first series referenced in the data market feed; the other two series act as explanatory series. The filter coefficients are computed using the latest 400 observations, since in this example 400 was set as the in-sample length (setSeriesLength) for all signals. As a side note, different in-sample lengths can be used for each signal, which allows one to study the effects of in-sample data size on signal output quality. Figure 2 shows the resulting in-sample signals created from the latest 400 observations.
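The cutoffs in the example describe the target that each real-time filter tries to approximate. In the DFA/MDFA literature this target is commonly the ideal symmetric filter whose frequency response is 1 on the passband and 0 elsewhere; for a passband [ω_1, ω_2] (with ω_1 = 0 for a lowpass) its coefficients are γ_0 = (ω_2 − ω_1)/π and γ_k = (sin(kω_2) − sin(kω_1))/(πk). The sketch below computes them. Reading `setBandPassCutoff` as the lower edge ω_1 and `setLowpassCutoff` as the upper edge ω_2 is an assumption based on the values used above, and `IdealTarget` is a hypothetical name.

```java
/**
 * Hypothetical sketch: coefficients gamma_0..gamma_maxLag of the ideal symmetric
 * filter with passband [lower, upper] in [0, pi] (lower = 0 gives a lowpass).
 * The full filter is symmetric: gamma_{-k} = gamma_k.
 */
final class IdealTarget {
    private IdealTarget() {}

    static double[] coefficients(double lower, double upper, int maxLag) {
        double[] g = new double[maxLag + 1];
        g[0] = (upper - lower) / Math.PI;
        for (int k = 1; k <= maxLag; k++) {
            g[k] = (Math.sin(k * upper) - Math.sin(k * lower)) / (Math.PI * k);
        }
        return g;
    }
}
```

For the second signal in the example, `IdealTarget.coefficients(Math.PI/15.0, Math.PI/10.0, 50)` would give the band-pass target under the assumption above. Because this target is two-sided and infinitely long, it cannot be applied at the end of the sample, which is exactly the gap the real-time MDFA filter is optimized to close.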

We now add 600 more observations out-of-sample, chop off the first 400, and then see how one can change a couple of parameters on the first signal (first MDFABase object).

```java
// Add 600 more observations out-of-sample
for (int i = 0; i < 600; i++) {
    TimeSeriesEntry observation = marketFeed.getNextMultivariateObservation();
    fxSeries.addValue(observation.getDateTime(), observation.getValue());
}

// Chop off the first 400 observations and plot
fxSeries.chopFirstObservations(400);
fxSeries.plotSignals("New 400");

/* Now change the lowpass cutoff to PI/6
   and the lag to -3.0 in the first signal (index 0) */
fxSeries.getMDFAFactory(0).setLowpassCutoff(Math.PI/6.0);
fxSeries.getMDFAFactory(0).setLag(-3.0);

/* Recompute the filter coefficients with the new parameters */
fxSeries.computeFilterCoefficients(0);

fxSeries.plotSignals("Changed first signal");
```

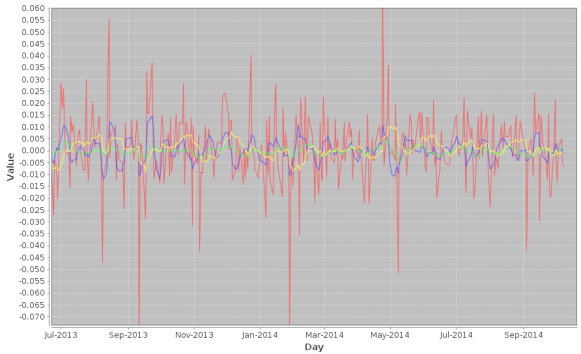

Figure 3: Signal values after adding 600 new out-of-sample observations

After adding the 600 out-of-sample values and plotting, we then change the lowpass cutoff of the first signal to PI/6 and the lag to -3.0 (forecasting three steps ahead). This is done by accessing the MDFAFactory, getting a handle on the first signal (index 0), and setting the new parameters. The filter coefficients are then recomputed on the newest 400 values (so all signal values are now in-sample).

In MDFA-Toolkit, plotting is done using JFreeChart. iMetricaFX, however, provides an app for building signal extraction pipelines with this toolkit as the backend, where all the automated plotting, analysis, and graphics are handled in JavaFX, creating a much more interactive signal extraction environment. Many more features are constantly being added to MDFA-Toolkit, especially features for Machine Learning applications, as we will see in the next article.

Big Data analytics in time series

We also implement in MDFA-Toolkit an interface to Apache Spark-TS, which provides a Spark RDD for time series objects, geared towards high-dimensional multivariate time series. Large-scale time series data shows up across a variety of domains. The spark-ts package, a library developed by Cloudera’s Data Science team, enables analysis of data sets comprising millions of time series, each with millions of measurements, and runs atop Apache Spark. A tutorial on creating an Apache Spark-TS connection with MDFA-Toolkit is currently being developed.